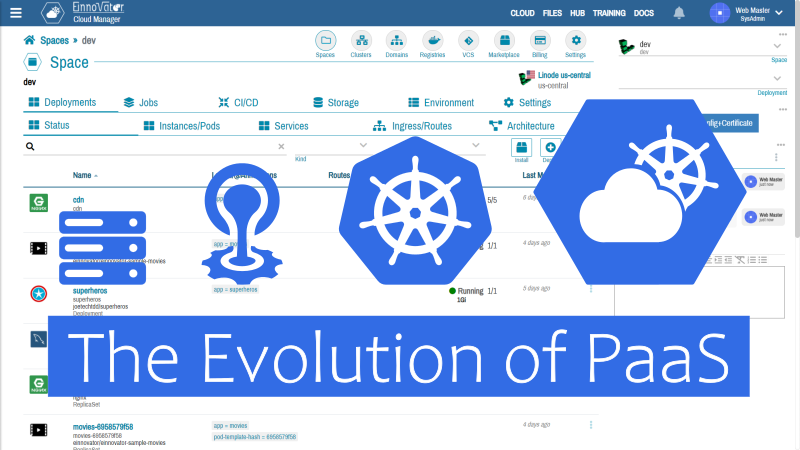

The Evolution of PaaS: Cloud Manager, a new Multicloud Platform built on Kubernetes

The quest to find the right kind of PaaS (Platform as a Service) has long been the holy grail of cloud computing. We claim that this goal is finally within reach, and now is not the time to let go.

Multiple generations of technology have come and gone, each of which, part ingenuity and part trial and error, has further simplified the process of deploying and managing applications and services running in compute clusters, be it in a remote cloud or on-premises. Two of the most recent attempts to create a general-purpose PaaS, Cloud Foundry and Kubernetes, have come very close to realizing the ultimate dream. However, because of choices made in design or focus, both have issues and limitations of their own, and there is room for improvement. Lessons can be learned from the experience gathered in building and using these platforms, and the prospects for combining the best of the two are on the rise. In the following, after some motivation and background, I preview our approach to building a better PaaS, as embodied in Cloud Manager. It is an abstraction layer on top of Kubernetes, but designed from the ground up for multi-cluster/multi-cloud devops and simplicity, with both developers and operations in mind. It takes inspiration from Cloud Foundry where credit is due, aiming for uncluttered workflows, while still retaining all the power and the non-paternalistic viewpoint of Kubernetes.

Disclosure: A shorter version of this article was originally published on TheNewStack.io under the title Cloud Manager, a new Multicloud PaaS Platform built on Kubernetes.

From VMs to Containers — The Innovators’ Dilemma

VMs (Virtual Machines) were a great step forward in the history of computing, and are among the technologies that ultimately enabled the cloud computing paradigm shift. But while VMs were once considered a high-level and lightweight abstraction, these qualifiers are relative, and VMs are now considered a bulky way to package and deploy software. Software containers, popularized by Docker, were a major improvement in this regard. And it soon became clear that containers were to be at the core of the search for a better PaaS.

Cloud Foundry was an early adopter of containers, but only half-heartedly. While application workloads run as containers, they are only optionally bundled as such. Buildpacks (sets of scripts used to build a stack at deployment time) remained the better-supported and preferred approach. But in hindsight, and considering all factors, containers are a more versatile approach to software bundling and distribution, so from the start they threatened to move Buildpacks onto the shelf of “deprecated” technologies. Most damaging, Cloud Foundry retained a VM-based architecture for many of its low-level components. This made the platform considerably heavyweight and complex to deploy, and it struggled to win mainstream adoption among the developer base and among second-tier and smaller organizations. Cloud Foundry’s abstractions and management model have the benefit of simplicity, but achieve it at the expense of generality. Stateless applications are treated as first-class citizens, while stateful services such as databases and message brokers are relegated to the periphery and externalized via ad hoc, case-by-case implementations of service brokers.

Kubernetes takes a more general and principled approach to container orchestration. All workloads are bundled in container images and deployed as such, including Kubernetes’ own system components. The internal architecture is also arguably simpler, based on “blackboard”-style interactions (supported by etcd and an API server), control events, and an open and extensible API. Most importantly, stateless and stateful workloads are treated on an equal footing: storage volumes are a native Kubernetes abstraction. This broadens the scope of use cases for Kubernetes and eliminates the need for ad hoc service integration extras.

Two Approaches to System Building — The Pure and the Practical

The main trouble with Kubernetes is that generality and practicality do not always go hand in hand. In fact, in many cases they pull in different directions. Kubernetes’ ambition to be general-purpose leads to many options and moving parts to consider when deploying workloads, each requiring verbose configuration files. While this may appeal to full-time devops engineers and staff who rely on boilerplate templates, rote learning of multiple command-line tools, script wizardry, and hard-won experience, it is to a large extent an unnecessary evil. It neglects the good lessons learned in building increasingly better PaaS: reducing the gap between development and operations, enabling continuous delivery and high velocity, and fostering architectural design exploration. These goals can be achieved by providing straightforward ways to accomplish the tasks that developers perform regularly. Rather than a universal and verbose configuration model, everyday work calls for pragmatic execution of common workflows. UIs were invented a long time ago, and not only for end users. Automation is almost always a good thing, and declarative configuration helps. But religious dependence on obscure “do it all” scripts does not lead to architectural transparency and freedom of exploration. Eventually, it leads to yet another set of organizational silos between development, infrastructure, and business: the devops mindset forgotten yet again. Google’s organizational culture, where Kubernetes traces its roots, is not necessarily something everyone wants to emulate.

This is not the first time this tradeoff between practicality and generality has played out. It is often seen in the contrast between dry, semi-closed software standards and extensible frameworks (open-source or not). A good example is the Java/JEE set of standards, which aims for rigor, non-ambiguity, and completeness, often at the expense of pragmatism. Spring Framework and Spring Boot, built up incrementally from practical needs and accumulated community input, replaced Java/JEE standards in many areas. The few cases where Java/JEE standards and APIs gained major adoption (e.g. JPA and, to some extent, CDI) were those where prior experience paved the way and guided the design of a proper API.

From what we know so far, Kubernetes is a good starting point and solid ground on which to build a practical PaaS. But more aspects need to be considered, and at least one more iteration is required to simplify developers’ work.

The Cloud Manager Way — Standing on the “Shoulders of Giants”

Multi-Cluster/Multi-Cloud From the Ground-Up

Cloud Manager is a new PaaS that leverages all the power of Kubernetes for managing individual clusters, but it is built from the ground up to support multiple clusters, possibly in multiple clouds. Cloud Manager uses its own database to keep high-level management information about the clusters (e.g. credentials) and other metadata, which facilitates the implementation of many desired features in a multi-cluster PaaS. Cluster access settings can be imported in a variety of ways: by uploading or providing a kubeconfig file, by importing directly from cloud providers (10+ are supported), or manually.

Cloud Manager provides a flat listing of Spaces across the different clusters; Spaces define the namespaces where resources are created. Each Space maps directly to a Kubernetes namespace in some cluster, but developers and devops don’t need to keep specifying and switching between clusters. Some inter-cluster operations are also simplified by this flat virtual Space listing. Namespaces that already exist in a Kubernetes cluster can be attached to make them fully managed Spaces.
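To make the Space abstraction concrete, the following is a minimal Python sketch of a flat Space registry, assuming each Space resolves to exactly one (cluster, namespace) pair. The names `Space`, `SpaceRegistry`, `attach`, and `resolve`, and the cluster names `gke-eu` and `eks-us`, are hypothetical illustrations, not Cloud Manager’s actual data model or API.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Space:
    """Hypothetical flat-listed Space: one name bound to one namespace in one cluster."""
    name: str
    cluster: str
    namespace: str


class SpaceRegistry:
    """Hypothetical registry giving a single flat listing of Spaces across clusters."""

    def __init__(self) -> None:
        self._spaces: Dict[str, Space] = {}

    def attach(self, name: str, cluster: str, namespace: str) -> Space:
        """Register a (possibly pre-existing) namespace as a managed Space."""
        if name in self._spaces:
            raise ValueError(f"Space {name!r} already exists")
        if any(s.cluster == cluster and s.namespace == namespace
               for s in self._spaces.values()):
            raise ValueError(f"{cluster}/{namespace} is already attached")
        space = Space(name, cluster, namespace)
        self._spaces[name] = space
        return space

    def resolve(self, name: str) -> Tuple[str, str]:
        """Map a Space name to its (cluster, namespace); no cluster switching needed."""
        space = self._spaces[name]
        return space.cluster, space.namespace

    def list_spaces(self) -> List[str]:
        """Flat listing of Space names, regardless of the cluster each one lives in."""
        return sorted(self._spaces)


registry = SpaceRegistry()
registry.attach("shop-dev", cluster="gke-eu", namespace="shop")
registry.attach("shop-prod", cluster="eks-us", namespace="shop")
print(registry.list_spaces())         # ['shop-dev', 'shop-prod']
print(registry.resolve("shop-prod"))  # ('eks-us', 'shop')
```

The point being illustrated is that callers address a Space by name only; which cluster hosts it becomes a lookup rather than a context switch.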

No Vendor Lock-In

Cloud Manager is completely non-intrusive for the underlying Kubernetes clusters. The clusters are completely unaware of Cloud Manager and its management DB. Cloud Manager is also 100% agnostic about the Kubernetes distribution and does not require installing any agent in the cluster. Stopping the use of Cloud Manager has no effect on the clusters, so vendor lock-in is minimal.

Parts of the Cloud Manager runtime and support libraries are available as open source. Other parts are under consideration or planned to be open-sourced throughout 2021. We anticipate an evolution similar to Cloud Foundry’s, where core components are open-sourced and open to community and partner contributions, while some other components are kept as vendor-differentiating IP. Currently, the complete system is available in a “freemium” model: the free community edition is free to use, but its functionality is limited above a certain resource threshold.

Simplified UX/UI

At the UX/UI level, in both the UI and the CLI, Cloud Manager borrows some of the best ideas of Cloud Foundry while still giving full access to all Kubernetes resources. Deployments are treated more holistically than in plain Kubernetes, by considering several related resources as part of the same application. This includes auto-creating a matching service and DNS ingresses for each deployment. DNS routes are managed simply, in the style of Cloud Foundry. Users can define one or more Domains, which specify information such as the DNS hostname suffix and a wildcard certificate. One or more DNS routes can be added to an application, at creation/startup time or while it is running. An option specifies whether a route uses its own Kubernetes ingress (the default) or a shared ingress. Routes can also override Domain-level certificates (e.g. if a wildcard certificate is not available). Unlike some high-level tools, Cloud Manager does not introduce a new abstraction for the application. Rather, it uses the Kubernetes abstractions directly, be it a (stateless) Deployment or a StatefulSet.
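The route and Domain rules above can be summarized in a small sketch, assuming a route’s hostname is its host name followed by the Domain’s suffix, and that a route-level certificate, when present, takes precedence over the Domain’s wildcard certificate. The `Domain`, `Route`, and `resolve_route` names and the certificate names are hypothetical; this is not Cloud Manager’s implementation.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class Domain:
    """Hypothetical Domain: a DNS hostname suffix plus an optional wildcard certificate."""
    suffix: str
    wildcard_cert: Optional[str] = None


@dataclass(frozen=True)
class Route:
    """Hypothetical DNS route attached to an application."""
    host: str
    domain: Domain
    cert: Optional[str] = None    # route-level certificate, overrides the Domain's
    shared_ingress: bool = False  # default: the route gets its own ingress


def resolve_route(route: Route) -> Dict[str, Optional[str]]:
    """Compute the effective hostname, TLS certificate, and ingress mode of a route."""
    hostname = f"{route.host}.{route.domain.suffix}"
    # Prefer the route's own certificate; fall back to the Domain's wildcard one.
    cert = route.cert if route.cert is not None else route.domain.wildcard_cert
    ingress = "shared" if route.shared_ingress else "dedicated"
    return {"hostname": hostname, "certificate": cert, "ingress": ingress}


apps = Domain("apps.example.com", wildcard_cert="wildcard-apps-tls")
print(resolve_route(Route("shop", apps)))
# {'hostname': 'shop.apps.example.com', 'certificate': 'wildcard-apps-tls', 'ingress': 'dedicated'}
print(resolve_route(Route("api", apps, cert="api-tls", shared_ingress=True)))
# {'hostname': 'api.apps.example.com', 'certificate': 'api-tls', 'ingress': 'shared'}
```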

Minimal Configuration and Microservices Friendly

Deployment settings are also simplified in a variety of ways. For most use cases, minimal configuration is required, with no need for complex YAML manifest files. Deployment settings are stored in the Cloud Manager management DB, which allows for incremental, interactive configuration. Deployments can be configured before they are actually deployed in a cluster, including adding persistent volume mounts and environment settings. A variety of utility operations are supported on deployments as well, such as “copy&paste” of deployments within a Space or between Spaces (possibly in different clusters), and generation/export of manifest files and other reusable packaging formats. Unlike with other tools for Kubernetes (e.g. Rancher, Lens), Kubernetes’ main command-line tool, kubectl, is dispensable for most use cases.
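To illustrate how minimal settings can expand into full manifests, here is a sketch that turns a name, image, port, and optional environment into a Kubernetes `Deployment` plus a matching `Service`, wrapped in a `v1` `List` and exported as JSON. The function `export_manifest`, the image reference, and the label scheme (`app: <name>`) are assumptions for illustration; Cloud Manager’s actual export format is not described in this article.

```python
import json
from typing import Dict, List, Optional


def export_manifest(name: str, image: str, port: int, replicas: int = 1,
                    env: Optional[Dict[str, str]] = None) -> Dict:
    """Expand minimal (hypothetical) deployment settings into a Kubernetes v1 List
    holding an apps/v1 Deployment and a matching ClusterIP Service."""
    labels = {"app": name}
    env_vars: List[Dict[str, str]] = [
        {"name": k, "value": v} for k, v in sorted((env or {}).items())
    ]
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                    "env": env_vars,
                }]},
            },
        },
    }
    # Service type defaults to ClusterIP when omitted.
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": labels},
        "spec": {"selector": labels, "ports": [{"port": port, "targetPort": port}]},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


# kubectl accepts JSON as well as YAML, e.g.: python export.py | kubectl apply -f -
print(json.dumps(export_manifest("shop", "registry.example.com/shop:1.0", 8080,
                                 env={"LOG_LEVEL": "info"}), indent=2))
```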

As in Cloud Foundry, the concept of service binding is supported, but in a more general way and as a complement to Kubernetes native abstractions (e.g. ConfigMaps and Secrets). A binding is a group of environment variables that are automatically set from values that depend on the deployment details of other services (e.g. URLs and access credentials). Deployments can define connectors, which other deployments can use to resolve bindings. Because stateless and stateful deployments are not treated differently, the same mechanism is useful both for connecting to backing services and to other microservices. Like OpenShift, Cloud Manager can optionally be aware of a deployment’s stack to automatically generate configuration and bindings, but it also allows custom bindings to be defined. This makes it easier to configure and deploy non-cloud-native apps, and reduces the number of use cases where an external naming/config service is needed for cloud-native apps. While plain Kubernetes has some features that simplify inter-service discovery and connectivity (e.g. namespace- and cluster-level DNS names), these mechanisms ignore DNS ingresses and, by design, cannot work across clusters.
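The binding mechanism described above can be sketched as a lookup from environment variable names to fields published by other deployments’ connectors. Everything in this example (the `Connectors` and `Bindings` shapes, `resolve_bindings`, the deployment names and URLs) is hypothetical; it only shows the idea that a backing database and a stateless microservice are resolved the same way.

```python
from typing import Dict, Tuple

# Hypothetical connectors: values each deployment publishes for others to bind to.
Connectors = Dict[str, Dict[str, str]]
# Hypothetical binding spec: ENV_VAR -> (deployment name, connector field).
Bindings = Dict[str, Tuple[str, str]]


def resolve_bindings(bindings: Bindings, connectors: Connectors) -> Dict[str, str]:
    """Turn a binding spec into concrete environment variables for a deployment."""
    env: Dict[str, str] = {}
    for var, (deployment, field) in bindings.items():
        try:
            env[var] = connectors[deployment][field]
        except KeyError:
            raise LookupError(f"unresolved binding {var} -> {deployment}.{field}") from None
    return env


connectors: Connectors = {
    # A stateful backing service and a stateless microservice use the same mechanism.
    "orders-db": {"url": "postgres://orders-db.shop.svc:5432/orders", "user": "app"},
    "payments": {"url": "https://payments.apps.example.com"},
}
env = resolve_bindings(
    {"DB_URL": ("orders-db", "url"), "DB_USER": ("orders-db", "user"),
     "PAYMENTS_URL": ("payments", "url")},
    connectors,
)
print(env["PAYMENTS_URL"])  # https://payments.apps.example.com
```

Resolving `PAYMENTS_URL` to an ingress hostname rather than an in-cluster DNS name is what would let such a binding point across clusters.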

CI/CD Support Built-in

Because in Kubernetes all apps need to be packaged as (Docker) images, creating and distributing images should be as central to operations as deploying them. This implies that CI/CD (Continuous Integration/Delivery) should not be regarded as an afterthought in a PaaS, or as a “nice to have” best practice in an organization. Cloud Manager has integrated CI/CD support on top of Tekton. One or more image registries (possibly private) can be defined, both to pull and to push images, and one or more Git VCS (version control systems) should be defined to pull source code from private repositories. CI/CD settings are defined close to the deployment information, which fosters a culture of quick push–build–deploy release cycles. Builds usually run in the same Space and cluster where the deployment is defined. For non-development deployments, the CI/CD settings are left unset. Secure webhooks are also supported to enable automatic builds and (optionally) deployments at commit time (for all versions or tagged versions only).
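As an illustration of a secure webhook trigger, the sketch below assumes the GitHub convention of an HMAC-SHA256 signature of the request body sent as `sha256=<hex>` (the `X-Hub-Signature-256` header), and a push `ref` such as `refs/tags/v1.2.0`. The article does not say which scheme Cloud Manager uses, so `verify_signature` and `should_build` are hypothetical helpers, not its API.

```python
import hashlib
import hmac


def verify_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check a GitHub-style 'sha256=<hex>' HMAC signature over the raw request body."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # compare_digest is designed to resist timing attacks on the comparison.
    return hmac.compare_digest(expected.encode(), signature_header.encode())


def should_build(ref: str, tags_only: bool) -> bool:
    """Decide whether a push to a Git ref triggers a build under the given policy."""
    if tags_only:
        return ref.startswith("refs/tags/")
    return ref.startswith(("refs/heads/", "refs/tags/"))


secret = b"webhook-secret"
body = b'{"ref": "refs/tags/v1.2.0"}'
header = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
print(verify_signature(secret, body, header))           # True
print(should_build("refs/heads/main", tags_only=True))  # False
```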

Cloud Manager also helps set up a cluster and Space to support the Tekton CI/CD runtime, including installing the runtime and selected Task and Pipeline definitions from catalogs. All the low-level details of defining the Tekton resources that run builds are handled automatically by Cloud Manager.
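For a sense of the low-level Tekton resources involved, here is a sketch that builds a `PipelineRun` (API version `tekton.dev/v1beta1`) for a single build. The Pipeline name `build-and-push`, its parameter names, and the `source` workspace are assumptions; a real setup would use whatever Pipeline and Task definitions were installed from the catalog.

```python
import json
from typing import Dict


def pipeline_run(app: str, git_url: str, revision: str, image: str) -> Dict:
    """Build a Tekton PipelineRun for a hypothetical 'build-and-push' Pipeline
    that takes git-url, git-revision and image params and a 'source' workspace."""
    return {
        "apiVersion": "tekton.dev/v1beta1",
        "kind": "PipelineRun",
        "metadata": {"generateName": f"{app}-build-"},
        "spec": {
            "pipelineRef": {"name": "build-and-push"},
            "params": [
                {"name": "git-url", "value": git_url},
                {"name": "git-revision", "value": revision},
                {"name": "image", "value": image},
            ],
            # Scratch workspace for the cloned sources, provisioned per run.
            "workspaces": [{
                "name": "source",
                "volumeClaimTemplate": {"spec": {
                    "accessModes": ["ReadWriteOnce"],
                    "resources": {"requests": {"storage": "1Gi"}},
                }},
            }],
        },
    }


# generateName needs `kubectl create -f -` rather than `kubectl apply -f -`.
print(json.dumps(pipeline_run("shop", "https://git.example.com/team/shop.git",
                              "main", "registry.example.com/shop:latest"), indent=2))
```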

Solutions Marketplace

Cloud Manager also has built-in support for a solutions marketplace. Similar features exist in many other tools for Kubernetes, but, as in Cloud Foundry, here it is baked into the core architecture and feature set. Marketplace solutions can be defined via one or more catalogs, each defined by an index file (compatible with Helm catalogs). Solutions can also be defined standalone as a “deployment plus settings”, which is useful for developer teams creating short- to mid-term reusable solutions without the trouble of creating a catalog or an elaborate definition such as a Helm chart.

Team/Enterprise Extensions

Cloud Manager is designed to be as lightweight as possible. It runs as a standalone app in single-user mode with a low resource footprint (2 GB of memory), or in multi-user/enterprise mode with a sidekick SSO Gateway acting as authentication and identity provider. The SSO Gateway enables a variety of features needed for team collaboration, including account and group management, and invitations. The SSO Gateway is provided as part of an integrated platform, based on a microservices architecture, which includes other optional support services, such as a user notification service (to notify collaborating users of events triggered by others), an external file storage service (e.g. for data backup snapshots), and social chat/comments in Space-dedicated channels. Cloud Manager also takes inspiration from Cloud Foundry in simplifying the Kubernetes security model. A small set of high-level roles (manager, developer, and auditor) that map to Kubernetes RBAC permissions provides a more convenient way to express most security policies. For advanced use cases, it is always possible to fall back to setting RBAC permissions directly.
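One plausible way to map the three high-level roles to RBAC, assumed here purely for illustration, is to bind them to Kubernetes’ built-in user-facing ClusterRoles `admin`, `edit`, and `view` with a namespaced `RoleBinding`. The mapping table, the `role_binding` helper, and the binding naming scheme are hypothetical; the article does not specify how Cloud Manager’s roles translate to RBAC rules.

```python
import re
from typing import Dict

# Hypothetical mapping of high-level roles onto Kubernetes' built-in
# user-facing ClusterRoles (admin, edit, view).
ROLE_TO_CLUSTERROLE = {"manager": "admin", "developer": "edit", "auditor": "view"}


def role_binding(user: str, role: str, namespace: str) -> Dict:
    """Express a high-level role for one user in one namespace as an RBAC RoleBinding."""
    if role not in ROLE_TO_CLUSTERROLE:
        raise ValueError(f"unknown role {role!r}")
    # Simple sanitization so names like e-mail addresses form a valid object name.
    safe_user = re.sub(r"[^a-z0-9.-]", "-", user.lower())
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{role}-{safe_user}", "namespace": namespace},
        "subjects": [{"kind": "User", "name": user,
                      "apiGroup": "rbac.authorization.k8s.io"}],
        "roleRef": {"kind": "ClusterRole", "name": ROLE_TO_CLUSTERROLE[role],
                    "apiGroup": "rbac.authorization.k8s.io"},
    }


rb = role_binding("alice@example.com", "developer", "shop")
print(rb["metadata"]["name"], rb["roleRef"]["name"])  # developer-alice-example.com edit
```

Binding to a ClusterRole from a namespaced RoleBinding grants its permissions only within that namespace, which matches the per-Space scope of these roles.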

Getting Started

There are a variety of quick ways to get started with Cloud Manager. It can be run with Docker, or installed in an existing cluster with Helm. Installing Cloud Manager in single-user mode as a complement to Docker Desktop is a popular approach. Cloud Manager is also available, ready to install, in the Kubernetes marketplaces of several cloud providers. The resources listed below provide detailed “getting started” instructions.

Most developers prefer to interact with Cloud Manager via its web UI. A command-line tool is also available to enable script-based automation of tasks.
